Objective

The goal of this lab was to use the Bayes Filter to implement grid localization on the real robot.

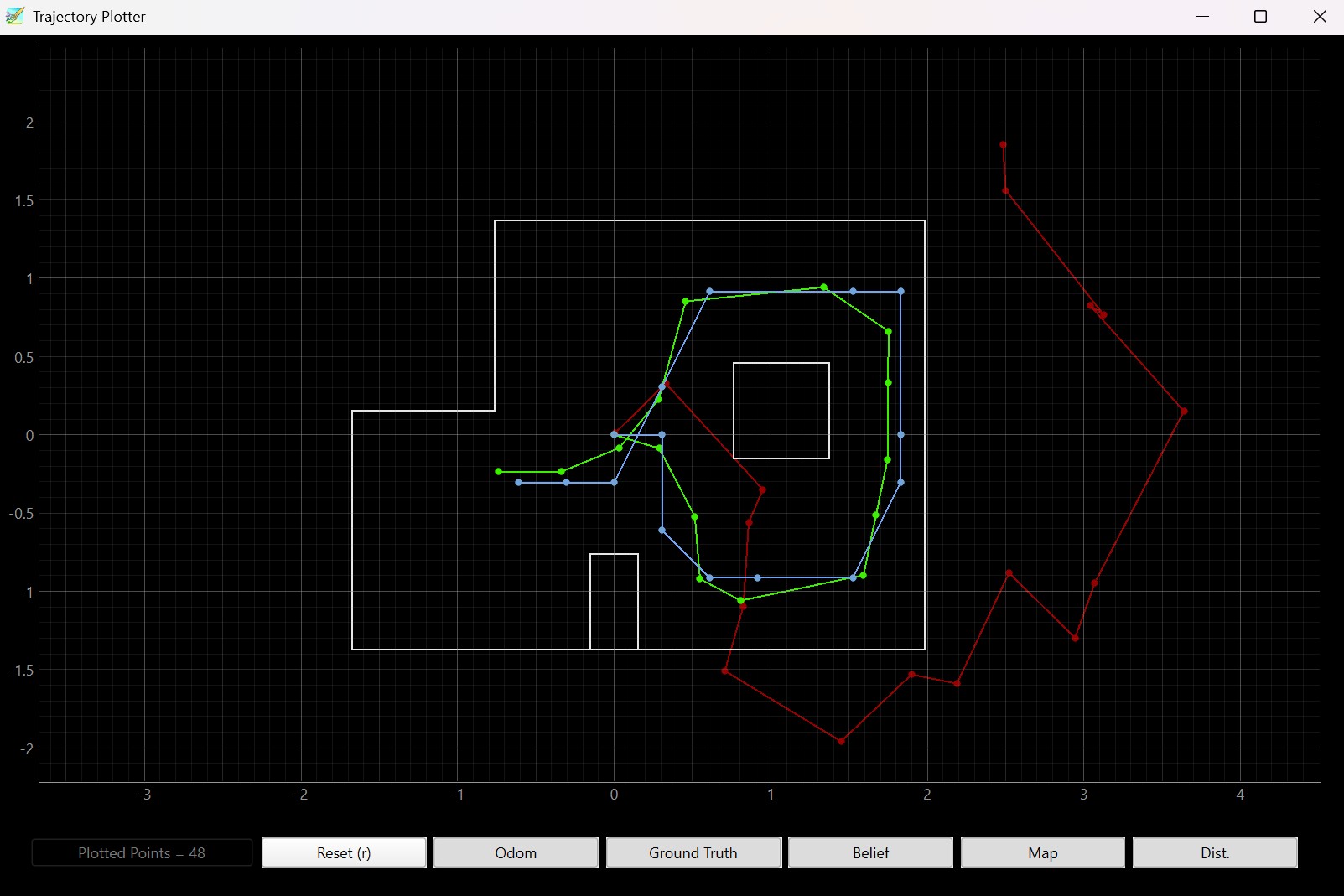

I first tested the localization on the virtual robot in simulation. The result is shown below:

The odometry, shown in red, is quite terrible. However, the localization closely follows the ground truth as expected.

To localize, the robot performs an observation loop: it slowly spins 360 degrees and takes 18 evenly spaced distance measurements, one every 20 degrees. To implement this, I slightly modified my Arduino code from lab 9.
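The spacing follows from simple arithmetic: 360° / 18 readings = 20° per step. The sketch below is a minimal illustration of that calculation. `observation_bearings` is a hypothetical helper, not part of the lab code, and it assumes the first reading is taken at 0°.

```python
# Hypothetical helper: evenly spaced bearings for one observation loop.
NUM_READINGS = 18       # readings per full turn, as described above
FULL_TURN_DEG = 360.0

def observation_bearings(num_readings=NUM_READINGS):
    """Return evenly spaced bearings in degrees, assuming the first is at 0."""
    step = FULL_TURN_DEG / num_readings   # 360 / 18 = 20 degrees
    return [i * step for i in range(num_readings)]

bearings = observation_bearings()
print(bearings[:3], bearings[-1])  # [0.0, 20.0, 40.0] 340.0
```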

I first updated my `get_TOF()` function so that the robot waits until sensor data is ready before moving to its next position.

I then updated my main loop so that my control flag turns off after 18 measurements are made. I also moved the data transmission code into the main loop so that I don't need to send a separate command from Python.
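Because the Arduino sketch itself isn't reproduced here, the following is only a Python model of the control flow described in the two paragraphs above, not the actual firmware. `data_ready`, `read_tof`, and `transmit` are hypothetical callables standing in for the sensor's data-ready check, the distance read, and the Bluetooth send; the turning step is indicated only by a comment.

```python
import itertools

NUM_READINGS = 18
STEP_DEG = 20.0

def wait_for_reading(data_ready, read_tof, max_polls=1000):
    """Poll until the sensor reports new data, then return one distance."""
    for _ in range(max_polls):
        if data_ready():
            return read_tof()
    raise TimeoutError("ToF sensor never reported data ready")

def observation_loop(data_ready, read_tof, transmit):
    recording = True                      # control flag, cleared after the last reading
    yaws, dists = [], []
    while recording:
        setpoint = len(dists) * STEP_DEG  # orientation for this reading
        # (on the robot, the orientation controller would turn to `setpoint` here)
        dists.append(wait_for_reading(data_ready, read_tof))
        yaws.append(setpoint)
        if len(dists) == NUM_READINGS:
            recording = False             # stop after 18 measurements
    transmit(yaws, dists)                 # send from the loop itself, no extra command
    return yaws, dists

# Usage with a fake sensor that reports "ready" on every third poll.
polls = itertools.count()
sent = []
yaws, dists = observation_loop(
    data_ready=lambda: next(polls) % 3 == 2,
    read_tof=lambda: 1000,
    transmit=lambda y, d: sent.append((y, d)),
)
```

Polling inside `wait_for_reading` mirrors the idea of not advancing until data is ready; the timeout is an added safeguard so a silent sensor can't hang the loop forever.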

I implemented the `perform_observation_loop` function like so:

Unfortunately, I only tested my localization at points (-3,-2) and (5,-3). In all previous labs I recorded distance measurements in inches, but for this lab the distances needed to be in meters. I didn't realize my units were off until after I had lost several hours debugging this trivial issue.
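The fix is a one-line scale factor, since 1 inch is exactly 0.0254 m. A minimal sketch follows; `inches_to_meters` is a hypothetical helper name, and it assumes the raw readings are already in inches.

```python
INCHES_TO_METERS = 0.0254  # exact, by definition of the inch

def inches_to_meters(distances_in):
    """Convert a list of distances from inches to meters."""
    return [d * INCHES_TO_METERS for d in distances_in]

readings_m = inches_to_meters([12.0, 39.37, 100.0])
```

Without the conversion, a 12 in reading would be interpreted as 12 m, about 39 times too far (1 m ≈ 39.37 in), which is enough to pull the belief toward entirely wrong regions of the map.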

Below is a video of my robot performing the observation loop:

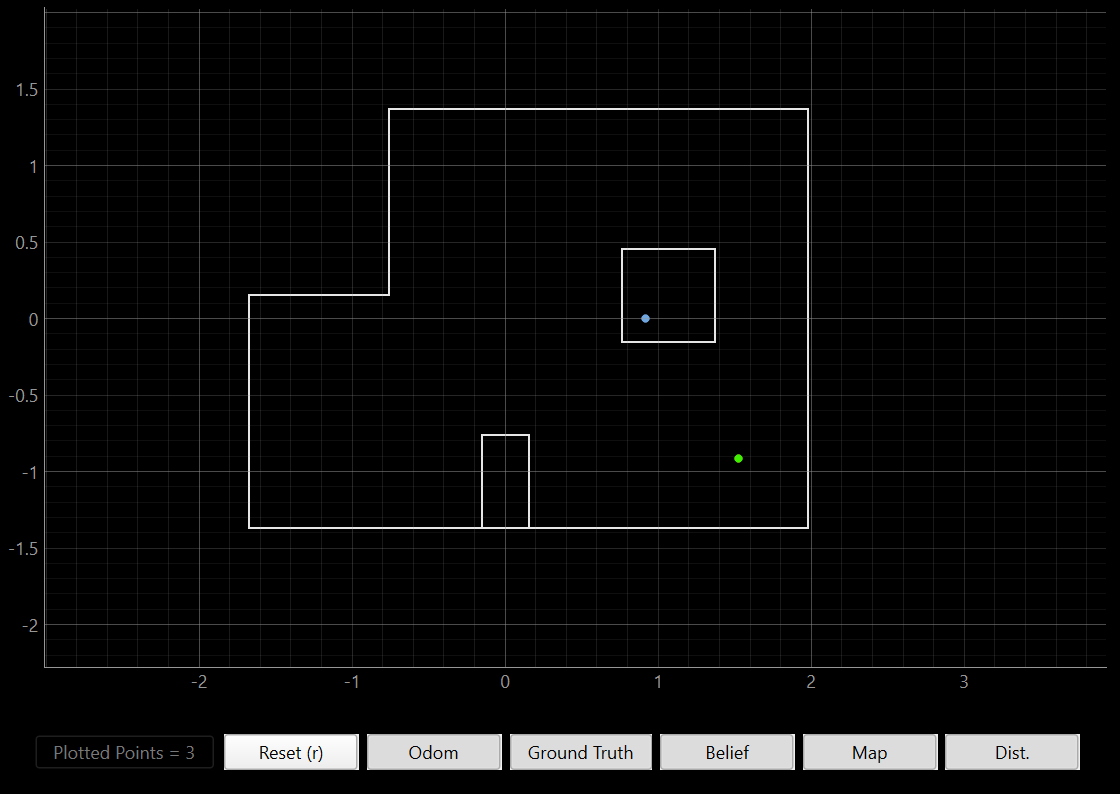

Below are the results for this observation loop. I printed `self.tofSide_dists` to check that all the data was being sent over. I then printed `sensor_ranges` and `sensor_bearings` to check that the arrays were the right shape. The localization results are shown in the lines after that.

[4/30/25] The localization results are quite awful. The distances stored in `self.tofSide_dists` matched what I expected, and my print statements confirm that the data is being sent over properly. Beyond that, there wasn't much room for implementation error, since the Bayes filter implementation is provided. I ran out of time in lab, but I might try adjusting the sensor noise parameters to see if I can get better localization.

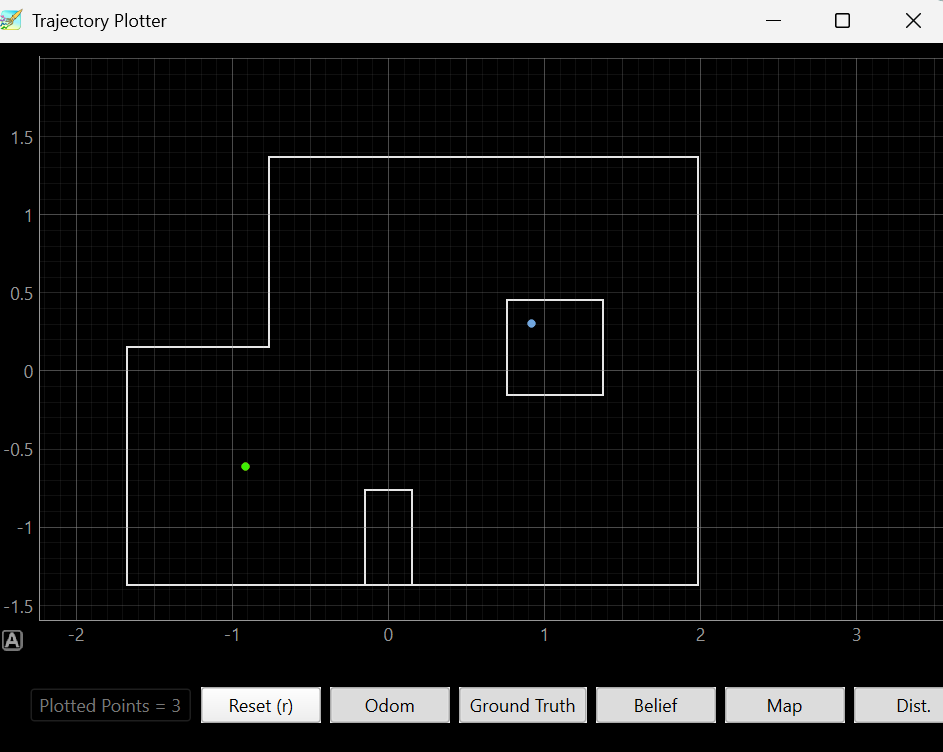
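For reference, the shape check mentioned above can be sketched as follows, assuming the localization code expects `sensor_ranges` and `sensor_bearings` as (N, 1) column arrays. The readings below are placeholders, not measured data, and `to_column` is a hypothetical helper.

```python
import numpy as np

def to_column(values):
    """Reshape a flat list of readings into an (N, 1) column array."""
    return np.array(values, dtype=float)[np.newaxis].T

tof_side_dists = [0.5] * 18                  # placeholder readings in meters
bearings_deg = [20.0 * i for i in range(18)]

sensor_ranges = to_column(tof_side_dists)
sensor_bearings = to_column(bearings_deg)
print(sensor_ranges.shape, sensor_bearings.shape)  # (18, 1) (18, 1)
```

`[np.newaxis]` turns the flat array into shape (1, 18), and `.T` transposes it into a column; `np.array(values).reshape(-1, 1)` would do the same.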

[UPDATE 5/5/25] I found some bugs in my own Arduino implementation. The measurements I was taking corresponded to the correct angles, but I didn't start taking measurements until my robot was at 20 degrees. After I fixed this issue, the localization improved slightly. I also added a delay so that the robot is fully stationary right before each measurement. I think there is a delay between the yaw measurement and the TOF measurement, so the recorded yaw values actually "lag" behind the actual yaw. The picture below shows my updated localization. It's still bad, but I'll focus on lab 12 first and maybe revisit it later.

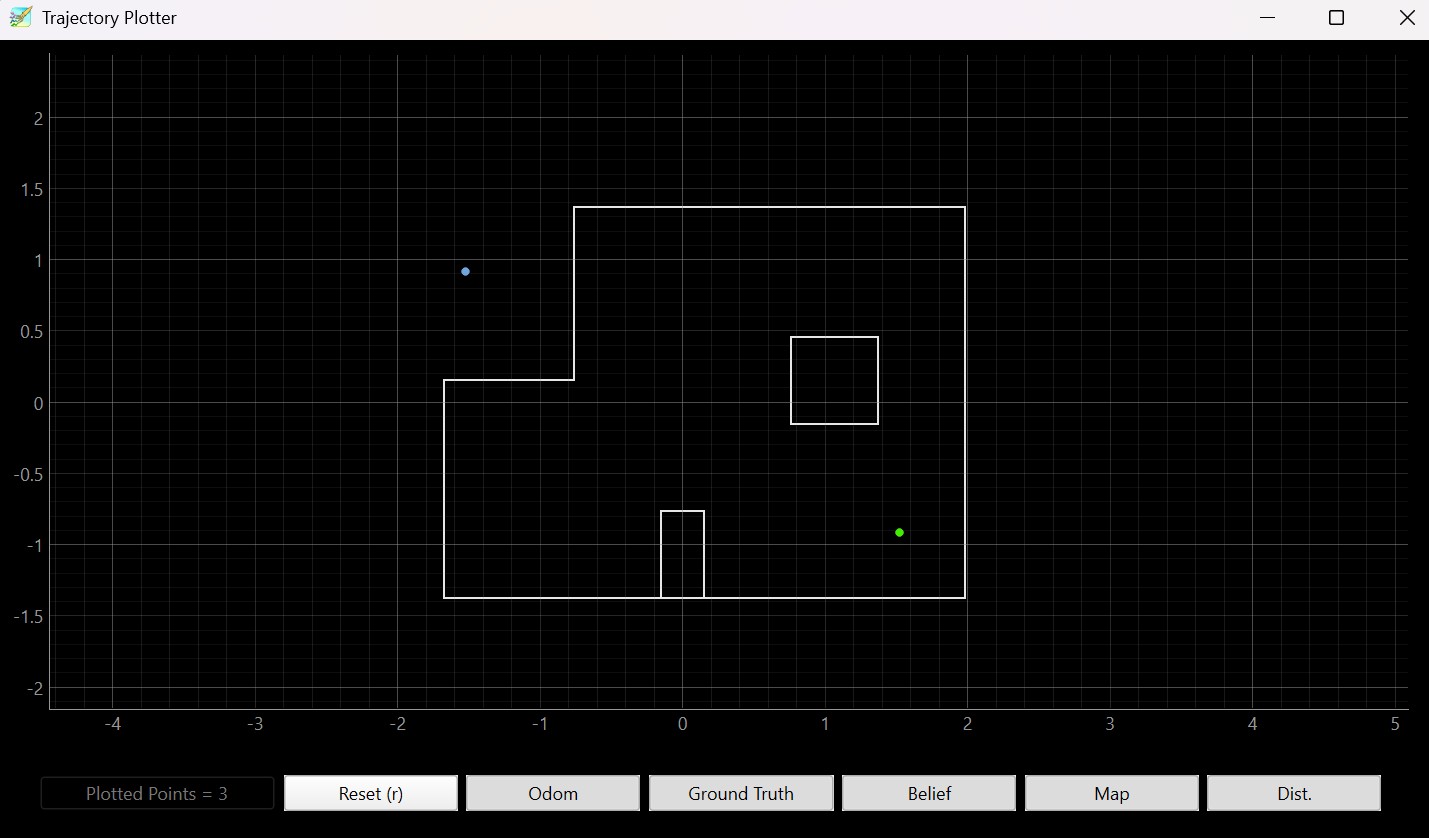
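If a set of readings had already been recorded starting at 20 degrees instead of 0, one alternative to re-running the loop would be to realign them in post-processing. This is a minimal sketch of that idea, assuming the readings are equally spaced by 20° and the start angle is known. `bearings_from` and `reorder_to_zero` are hypothetical helpers. This approach does not address the yaw/TOF lag, which the added delay targets.

```python
STEP_DEG = 20.0
NUM_READINGS = 18

def bearings_from(start_deg, num=NUM_READINGS, step=STEP_DEG):
    """Bearings visited when the first reading is taken at start_deg."""
    return [(start_deg + i * step) % 360.0 for i in range(num)]

def reorder_to_zero(readings, start_deg, step=STEP_DEG):
    """Rotate readings so that index 0 corresponds to a bearing of 0 degrees."""
    shift = int(round(start_deg / step)) % len(readings)
    return readings[-shift:] + readings[:-shift] if shift else list(readings)

# Stand-in "readings": each value is simply the bearing it was taken at.
raw = bearings_from(20.0)            # [20.0, 40.0, ..., 340.0, 0.0]
aligned = reorder_to_zero(raw, 20.0)
print(aligned[:3])                   # [0.0, 20.0, 40.0]
```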

Below are the results from an observation loop. Again, the localization results are awful. I don't think it's a unit conversion or data transmission issue; I might just need to adjust the sensor noise.
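To see why the sensor noise parameter matters, here is a sketch assuming the common Gaussian sensor model, where each range reading is compared to the range expected from a grid cell and the per-reading likelihoods are multiplied. The readings and expected ranges below are made up for illustration. `gaussian` and `observation_likelihood` are hypothetical helpers, not the provided Bayes filter code.

```python
import math

def gaussian(x, mu, sigma):
    """Probability density of x under a normal distribution N(mu, sigma^2)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def observation_likelihood(measured, expected, sigma):
    """p(z | x): product of independent per-reading Gaussian likelihoods."""
    p = 1.0
    for z, z_hat in zip(measured, expected):
        p *= gaussian(z, z_hat, sigma)
    return p

measured = [1.00, 0.80, 1.20]   # made-up readings (m)
cell_a = [1.05, 0.85, 1.15]     # expected ranges at a cell close to the true pose
cell_b = [1.30, 0.60, 1.50]     # expected ranges at a worse-matching cell

for sigma in (0.05, 0.3):
    la = observation_likelihood(measured, cell_a, sigma)
    lb = observation_likelihood(measured, cell_b, sigma)
    print(f"sigma={sigma}: likelihood ratio a/b = {la / lb:.3g}")
```

With sigma = 0.05 m the better-matching cell is favored by many orders of magnitude, while with sigma = 0.3 m the ratio is only about 3. A very small sigma makes each update overconfident, so systematic errors such as a lagging yaw can concentrate the belief in the wrong cell. A larger sigma keeps the belief broader and more forgiving.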

It was unfortunate that I lost so many hours to troubleshooting a trivial unit conversion issue. The time used for that would've been enough for me to try to localize the other points and maybe even adjust the sensor noise. I'll probably give it another shot during Wednesday lab.

I referenced Nila's site from last year when figuring out how to implement `perform_observation_loop()`.